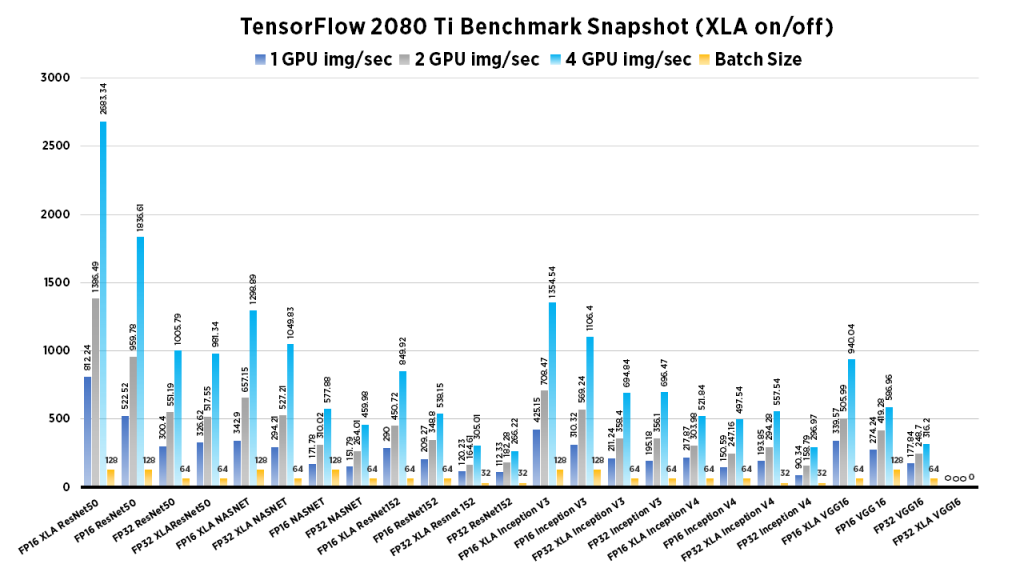

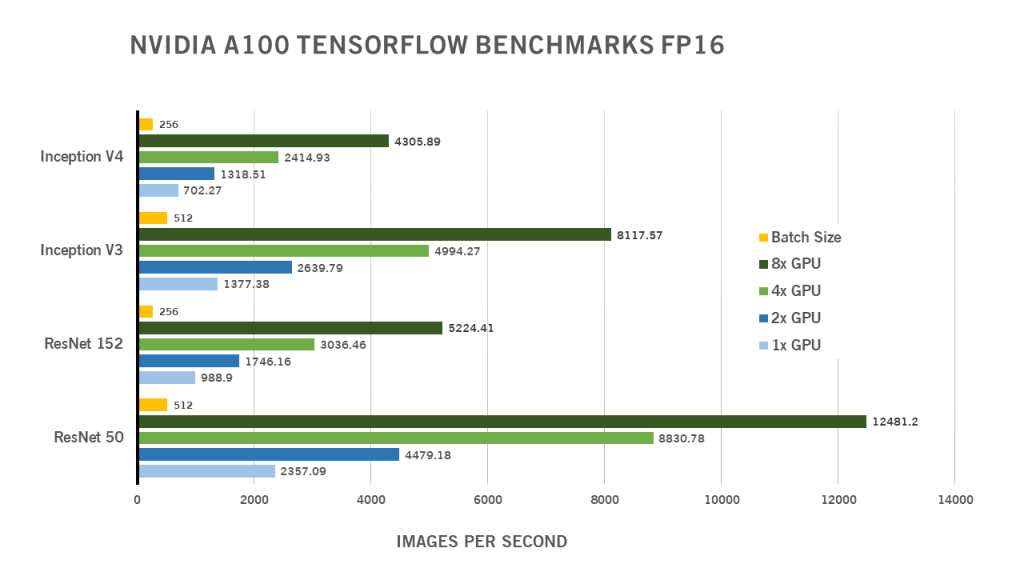

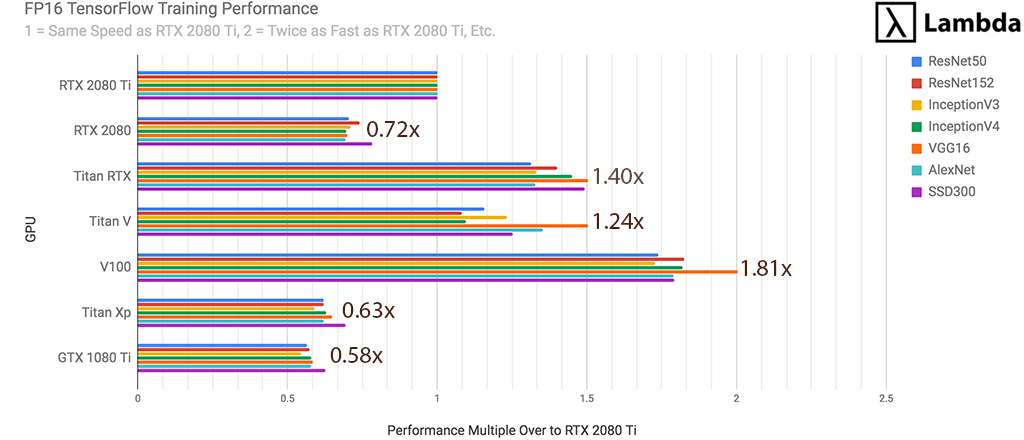

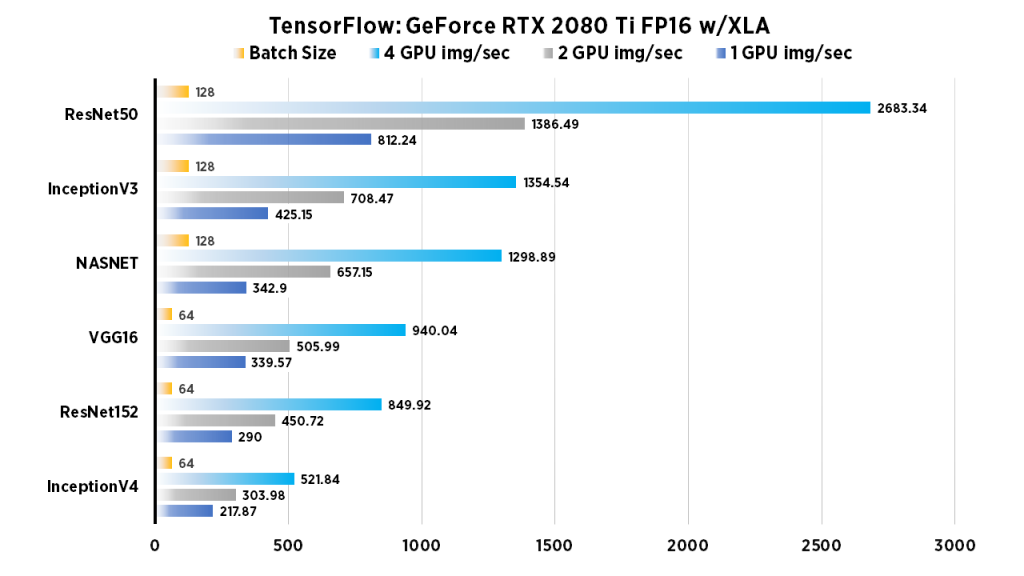

NVIDIA RTX 2080 Ti Benchmarks for Deep Learning with TensorFlow: Updated with XLA & FP16 | Exxact Blog

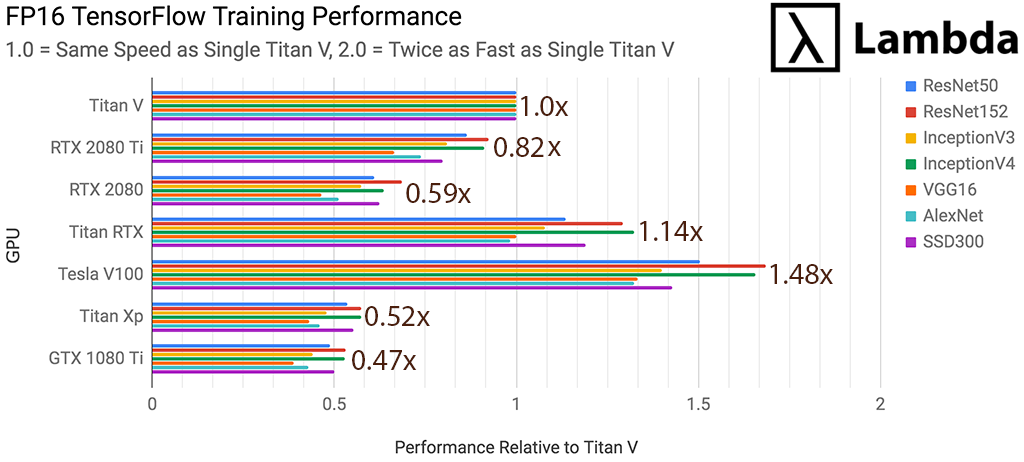

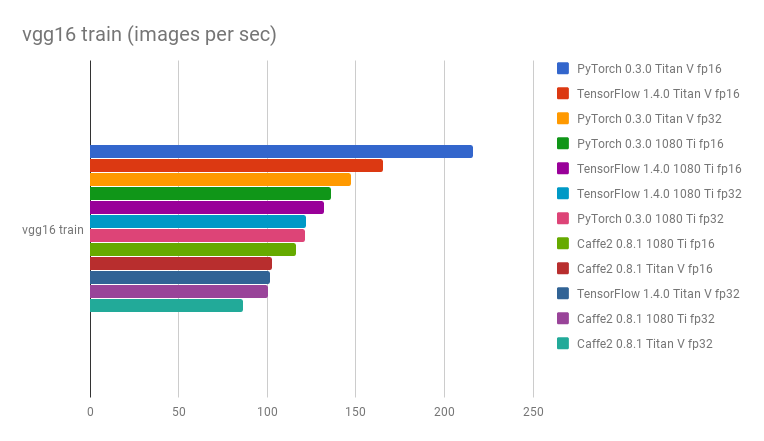

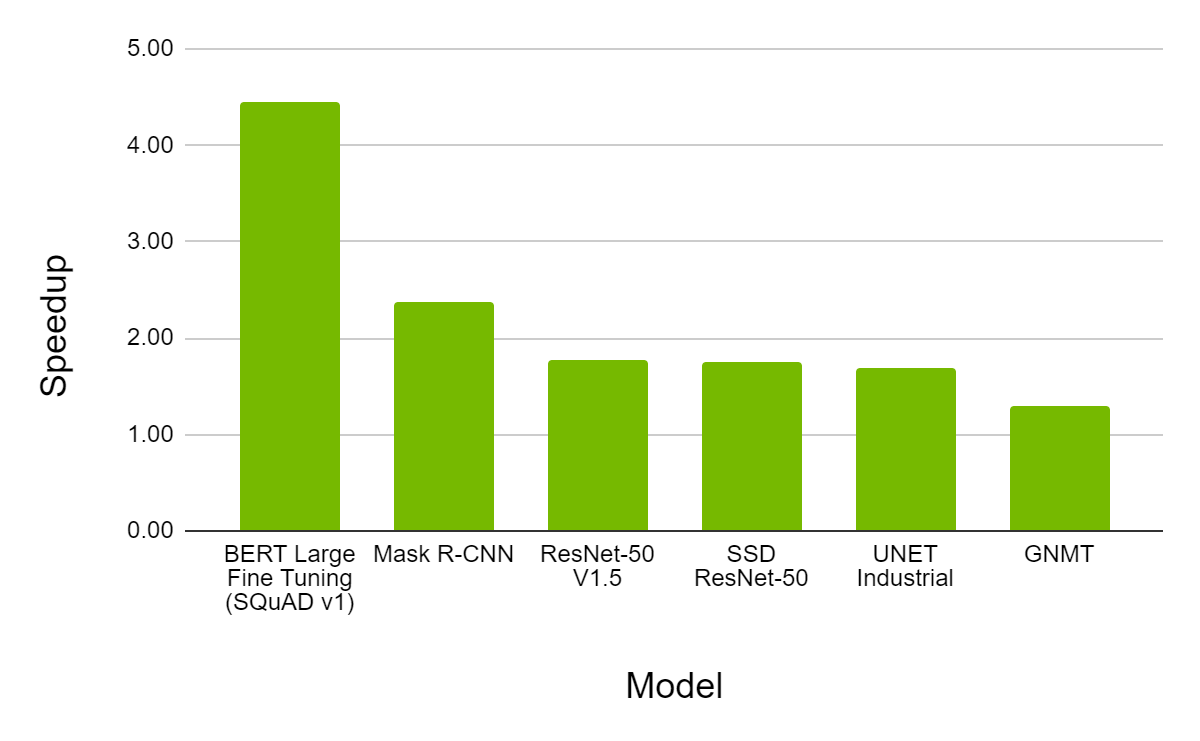

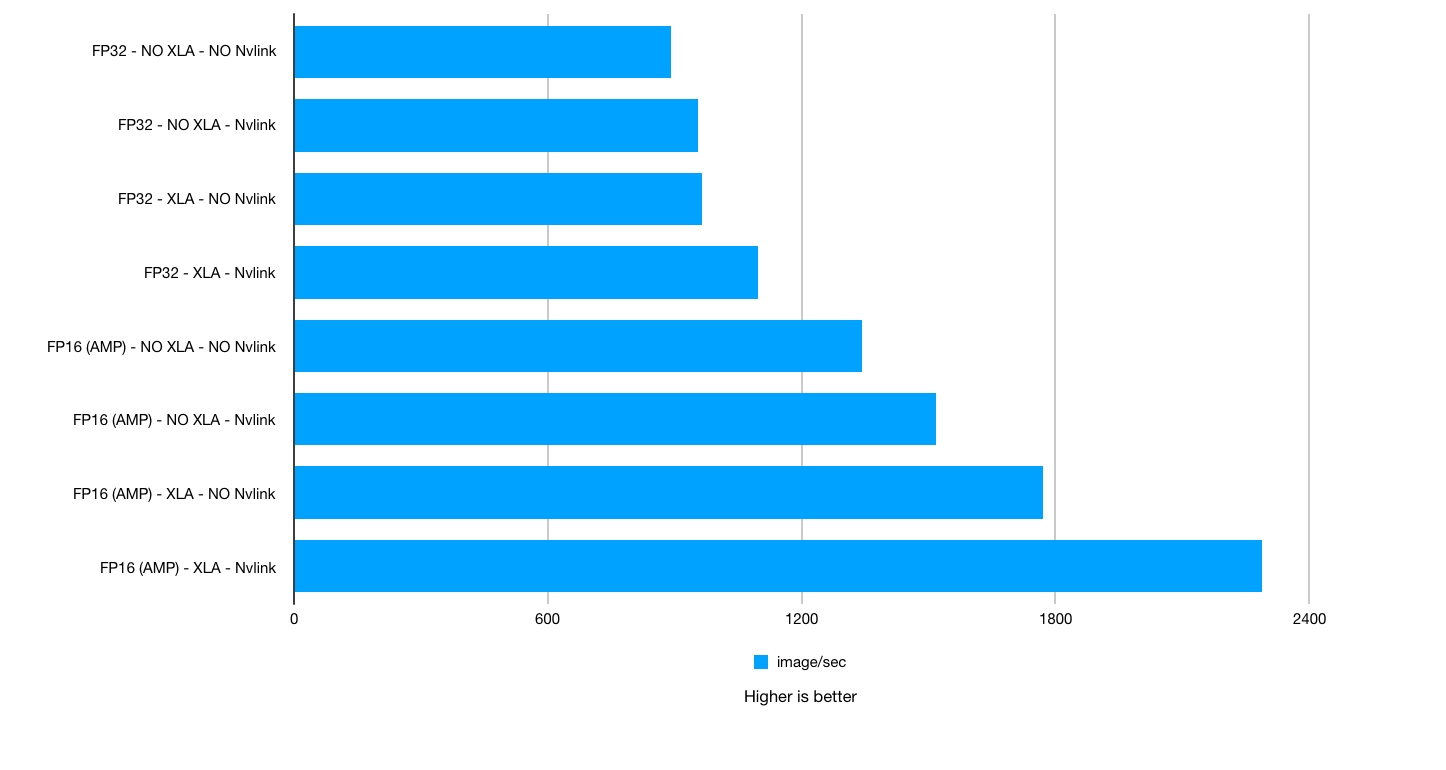

NVIDIA TITAN RTX Deep Learning Benchmarks 2019 – Performance improvements with XLA, AMP and NVLink in TensorFlow | BIZON Custom Workstation Computers, Servers. Best Workstation PCs and GPU servers for AI/ML, deep

![[Educational Video] PyTorch, TensorFlow, Keras, ONNX, TensorRT, OpenVINO, AI Model File Conversion - YouTube](https://i.ytimg.com/vi/bE1N7sq3xIA/maxresdefault.jpg)

[Educational Video] PyTorch, TensorFlow, Keras, ONNX, TensorRT, OpenVINO, AI Model File Conversion - YouTube

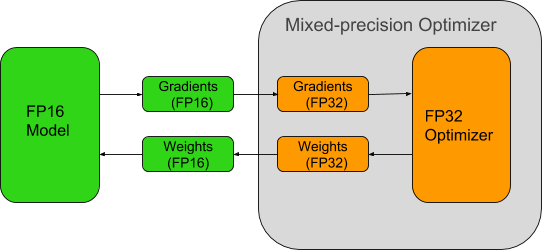

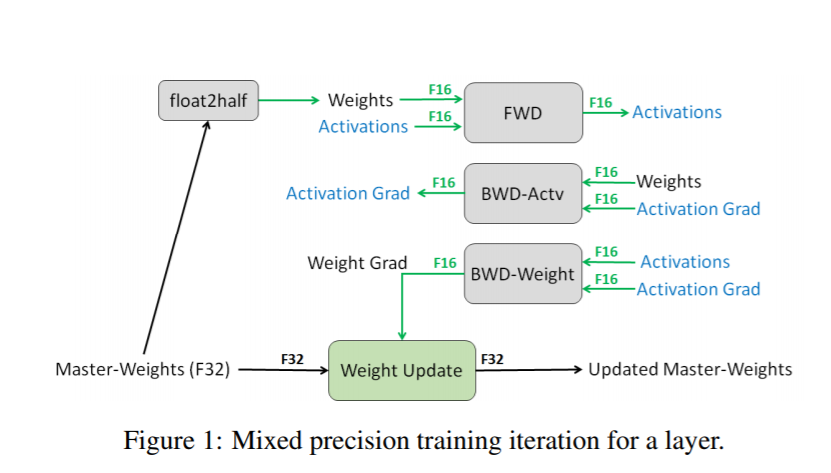

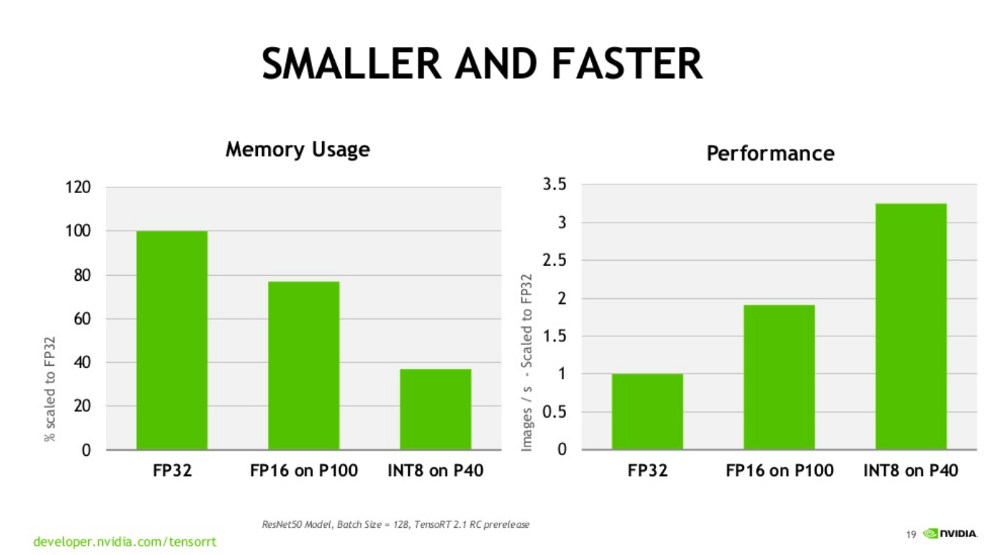

TensorFlow Model Optimization Toolkit — float16 quantization halves model size — The TensorFlow Blog